10 things we learned from Google I/O 2024

All the tantalising announcements from Google’s Big Tech Show — aka, AI In Everything

After the biggest news from Google I/O 2024? We’ve got you covered. Having watched the keynote live while furiously scribbling notes, we’ve served up a platter of tasty bite-sized announcements below. Spoiler alert — AI is in everything.

1. Ask Photos

Google Photos is introducing a new experimental feature called Ask Photos, powered by Gemini AI models. Ask Photos allows users to search their photo library more intuitively, using natural language queries like “Show me the best photo from each national park I’ve visited.” Ask Photos also helps with tasks like curating trip highlights and generating captions — simply ask it for the best photos from your trip abroad, and it’ll instantly provide you with a curated selection of some of your best shots. And if your hats are of the tinfoil variety, Google emphasises the privacy protections in place, noting that personal data is never used for ads and is safeguarded with industry-leading security measures. The Ask Photos feature will begin rolling out to users in the coming months.

2. Veo: AI-generated videos

Image generation has already blown minds with its rapid development over recent years, and the same appears to be on the horizon for video. At I/O 2024, Google unveiled Veo, a powerful new video generation model capable of creating high-quality 1080p videos over a minute long, in various cinematic styles. Veo has an advanced understanding of natural language and visual semantics, allowing it to accurately capture the tone and details of a prompt, while sticking to the laws of physics for realistic and natural motion. Very impressive indeed. Google is collaborating with filmmakers and creators to experiment with Veo and improve how it designs, builds and deploys the model to best support the creative storytelling process. Veo is currently available for select creators as a private preview, with plans to bring its magic to YouTube Shorts and other apps in future.

3. Imagen 3: Google’s best text-to-image model to date

Google also unveiled Imagen 3, its most advanced text-to-image model to date, capable of whipping up highly detailed, photorealistic images with significantly fewer visual artefacts compared to previous versions. One thing we’re particularly looking forward to testing out is the improvement in AI-rendered text within images, which is something that current models struggle with. If it works as well as advertised, it could open up a whole new world of content generation. Bespoke AI birthday cards, here we come.

4. Circle to Search rescues your homework

Circle to Search, a feature already available on various Pixel and Samsung devices, allows users to search for anything on their phone using a simple gesture without switching apps. At I/O 2024, Google announced that it’s expanded Circle to Search’s capabilities to help students with homework directly from their mobile devices. By circling a challenging prompt, students can receive step-by-step instructions for solving physics and math word problems. Later this year, the feature will be enhanced to tackle more complex problems involving symbolic formulas, diagrams, and graphs, powered by LearnLM, Google’s new family of learning-focused models. Circle to Search is set to double its availability by the end of the year, potentially giving teachers some time to prepare for the onslaught of AI-generated homework.

5. Gemini on Android

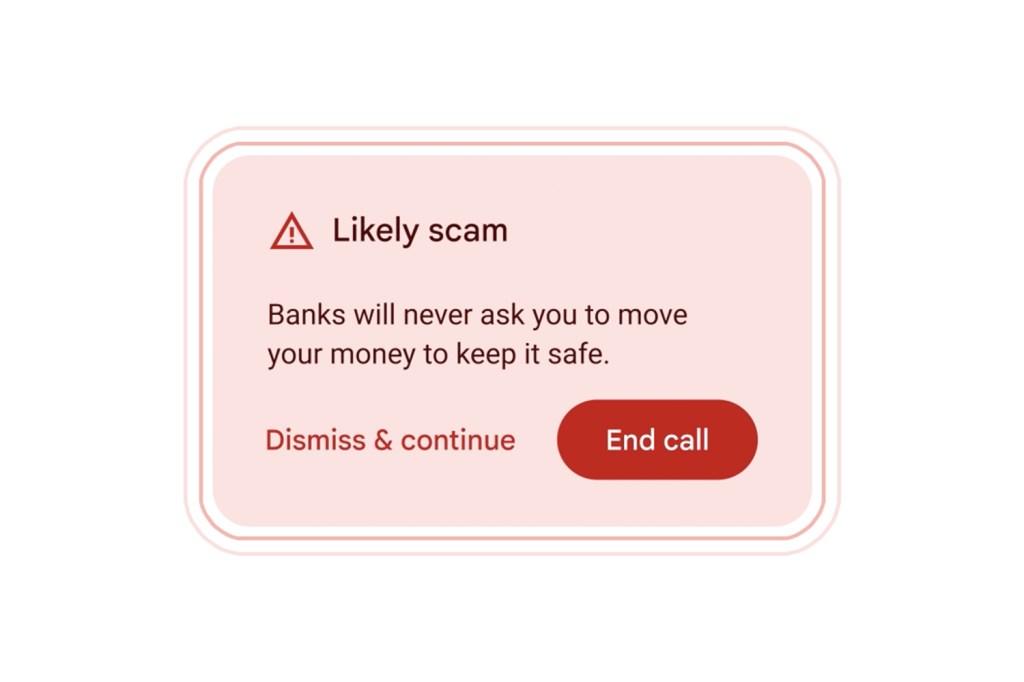

Google is also enhancing its Gemini AI assistant on Android so that it can better understand the context of what’s on screen and the app currently in use. This generative AI-powered experience, which is integrated into the Android operating system, is set to become more versatile and user-friendly. Soon (no specific time frame has been given), Android users will be able to access Gemini’s overlay on top of the app they are using, allowing for seamless interaction with the AI assistant and unlocking actions like dragging and dropping generated images into Gmail, Google Messages, and other apps. Gemini Advanced subscribers will also be able to take advantage of the “Ask this PDF” feature, which automatically mines answers from PDF documents without the need to scroll through multiple pages. This update is expected to roll out over the next few months. Lastly, Google is also testing a new AI-powered feature which could detect red flags during phone calls, warning you if it sounds like the person you’re speaking to could be a scammer.

6. Gemini for Google Search

A new Gemini model, customised for Google Search, combines advanced capabilities like multi-step reasoning and planning, with Google’s existing search systems. In other words, it’s Google Search, but better. AI Overviews — a Labs feature which provides quick answers and overviews to user queries by curating sources from multiple sites — is now rolling out to everyone in the US, with plans to expand worldwide by the end of the year. Soon, users will be able to adjust AI Overviews by simplifying the language (useful for answering children’s queries), or breaking down the information in more detail. Google Search will also offer planning capabilities, starting with meals and vacations. Users can create customised meal plans and easily export them to Docs or Gmail, and later this year, additional categories like parties, date nights, and workouts will be added.

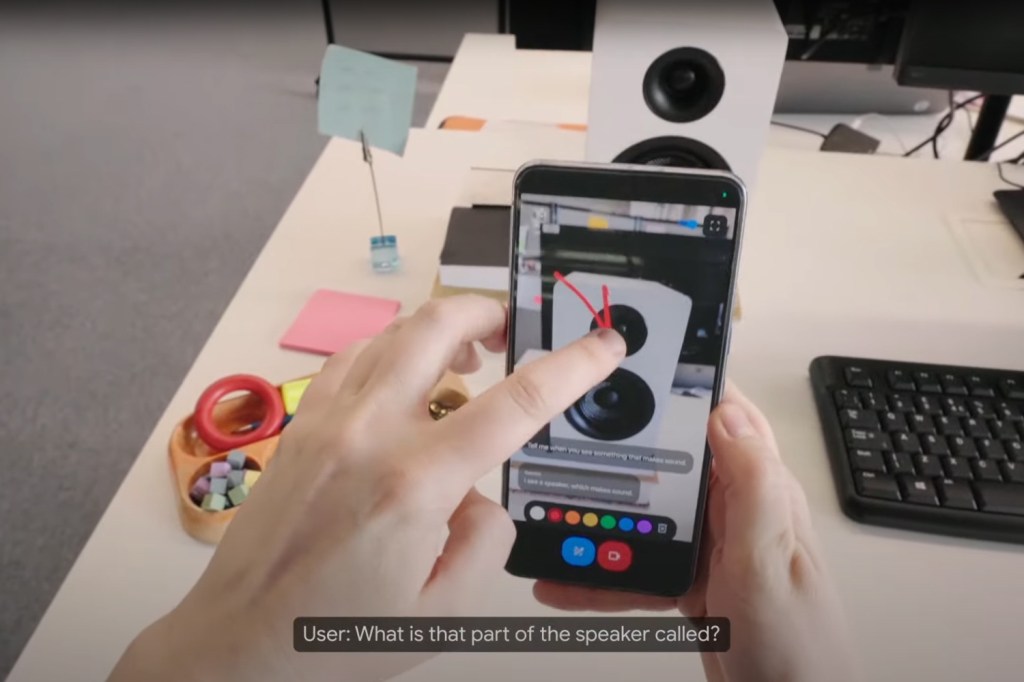

7. Ask questions with a video

Ever dreamed of using AI to search through video content? Your time has come, thanks to the new Search with video feature. One example Google provides is using the video search feature to troubleshoot a broken record player. Instead of struggling to find the right words to describe the problem, the user can simply record a video of the record player misbehaving, such as the metal piece with the needle drifting unexpectedly. Searching with video will be available soon for Search Labs users in English in the US, and will expand to more regions in the (hopefully) near future.

8. Gemini 1.5 Pro reads all the things

Google is also bringing Gemini 1.5 Pro, its most advanced AI model, to Gemini Advanced subscribers. Its main draw is its significantly expanded context window, which starts at one million tokens, making it the longest of any widely available consumer chatbot worldwide. With such a lengthy context window, Gemini Advanced can comprehend multiple large documents totalling up to an impressive 1,500 pages, or summarise 100 emails. In the near future, it will also be capable of processing an hour of video content or codebases exceeding 30,000 lines.

To fully utilise this extensive context window, Gemini Advanced now allows users to upload files directly from their devices or via Google Drive. This feature enables users to quickly obtain answers and insights from dense documents, such as understanding the specifics of a pet policy in a rental agreement, or comparing key arguments from multiple lengthy research papers. It can even create custom visualisations and charts based on information from spreadsheets.

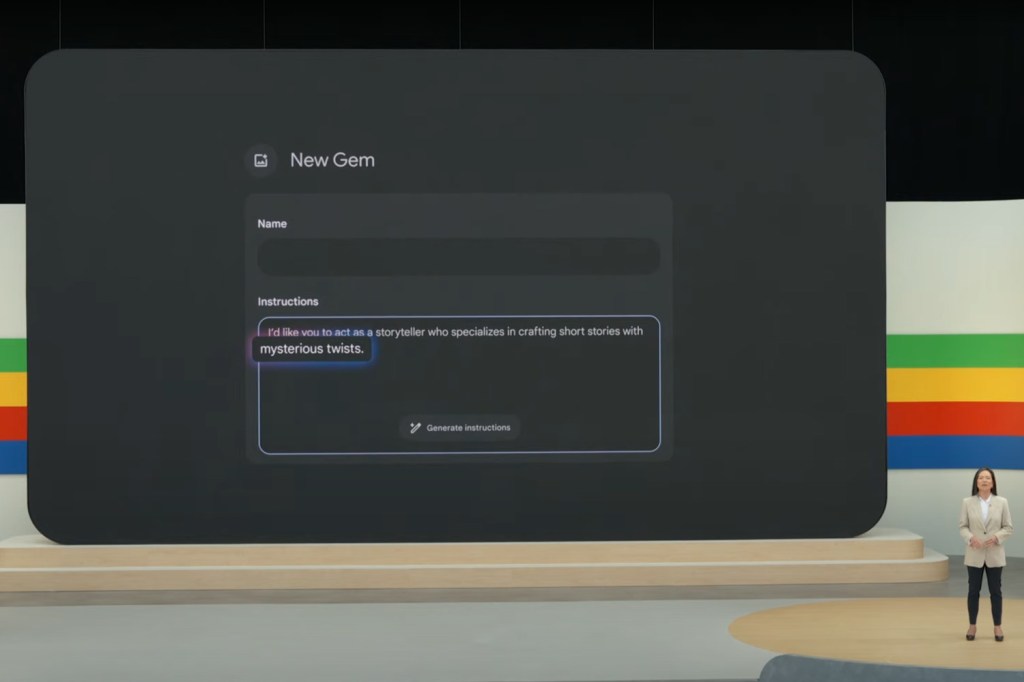

9. A real Gem

Google I/O 2024 also introduced a new feature for Gemini Advanced subscribers called Gems, which will let users create personalised versions of the Gemini AI assistant. With Gems, users can tailor their AI companion to suit their specific needs and preferences, whether they’re looking for a gym buddy, sous chef, coding partner, or creative writing guide, to name but a few optimistic examples. Users simply need to describe what they want their Gem to do and how they want it to respond. You could, for example, request, “You’re my running coach, give me a daily running plan and be positive, upbeat and motivating.” Gemini will then take these instructions and, with a single click, enhance them to create a Gem that meets your requirements.

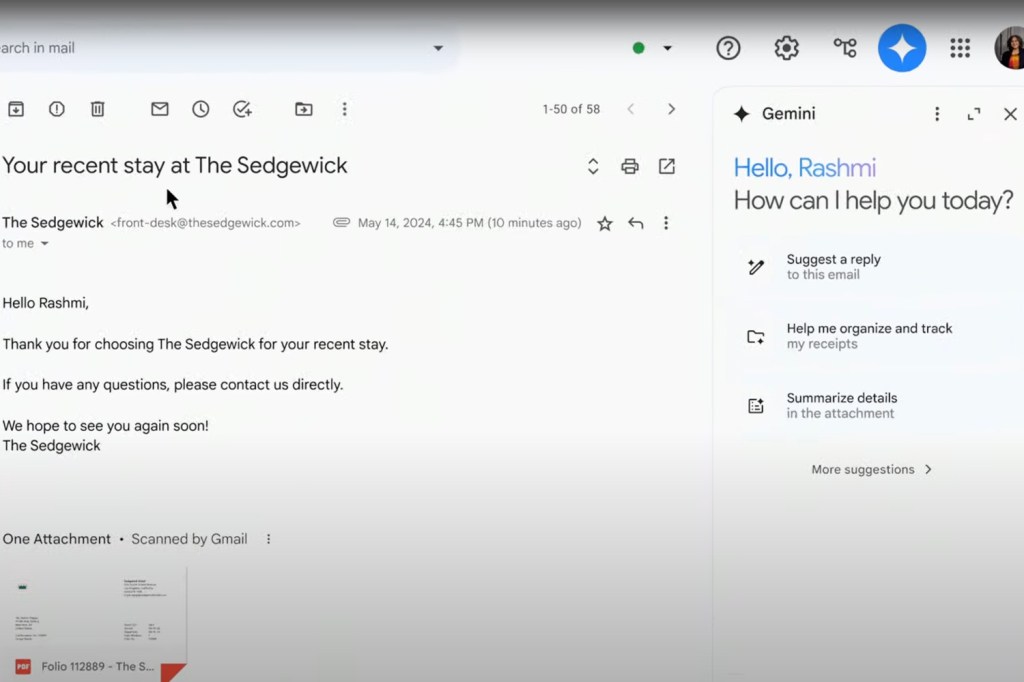

10. Turbocharged Gmail

The Gmail mobile app also has an exciting update on the way, which is, you guessed it, Gemini-related. The new Gemini icon in Gmail will offer helpful options, such as summarising emails, listing the next steps, or suggesting replies. Users can also use the open prompt box for more specific requests, like finding a particular document or asking for discussion questions for an upcoming meeting.