Apple isn’t releasing AI. Instead, you’re getting something better – Apple Intelligence

AI needs to be personal and secure, and that’s where Apple Intelligence comes in, with features baked right into the system

Apple just held its WWDC 2024 developers conference. At the event, the Cupertino tech giant unveiled all of its new software coming later this year. iOS 18 was the biggest announcement, alongside all of Apple’s new AI features. Rather than just giving you AI, Apple is rolling out “Apple Intelligence” – something that’s more personal. That sounds pretty wishy-washy – but it’s very impressive.

Apple Intelligence puts AI models at the heart of your device. It draws from your usage, so it’s completely personal while keeping everything private. It will be able to generate language, understand images, and take action. What sets it apart from other AI models is a large dose of personal intelligence. Everything is powered by on-device processing, so your data isn’t actually collected.

Siri is Apple Intelligence’s biggest change

Leading the charge is a new AI-powered Siri. This long-overdue update finally gives some real smarts to an assistant that often doesn’t seem so smart. Rather than just giving Siri more stuff to do, it means the assistant has a better shot at understanding you. Siri also has a new look, appearing around the edges of your screen instead of in the middle. It will remember the context of your conversation across follow-up requests. And the option to type is always there, making Siri more like an AI chatbot.

Apple Intelligence wants to succeed where Rabbit failed – taking actions on your behalf. Requests like “Play the podcast my wife sent the other day” are now possible. And you don’t have to speak perfectly every time. If you stumble over your words, change your mind, or throw in an “Oh, wait,” Siri will now understand.

Siri can also see and understand what’s on your screen. If someone texts you their new address, you can just ask Siri to add it to their contact card. Thanks to Apple Intelligence, Siri will know exactly what you mean. You can even control Apple features and settings now; not being able to do so has been a major gripe. Got a question about how to use a product? Siri can tell you, so you don’t have to search for the answer.

Siri can even move through apps with you. Questions like “Show me my photos of Stacy in New York wearing her pink coat” will now work. A follow-up request like “Make it pop” will edit the photo. You can then ask Siri to add the photo to a document, and it will. Not convincing enough? You can ask Siri, “When is mum’s flight landing?” It will find the flight from your email or messages, and give you the answer. If that’s not a helpful AI feature, I don’t know what is.

But your device is full of more features

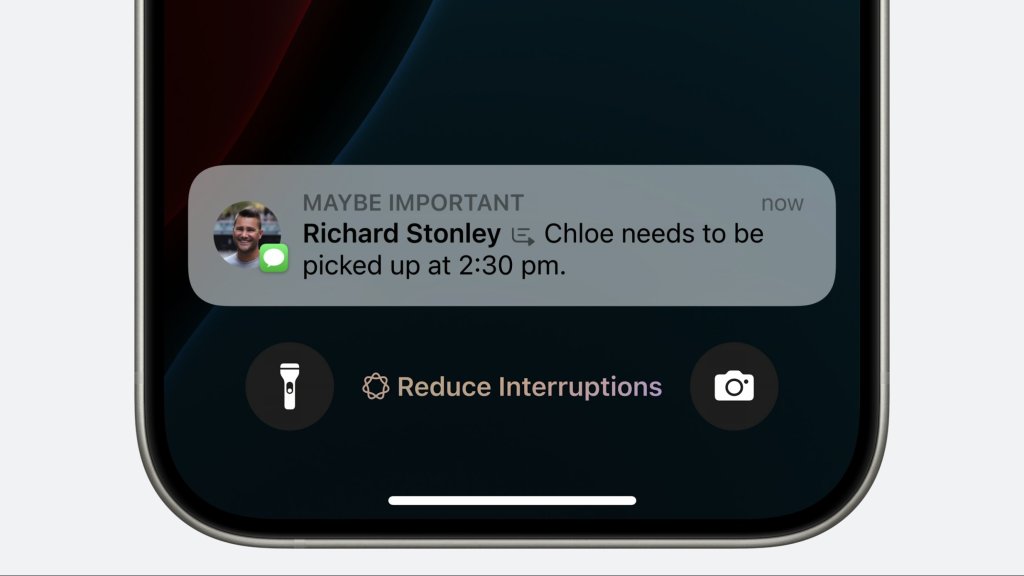

Your iPhone will now prioritise your notifications, so you don’t miss anything important. Priority notifications appear at the top, and things get summarised to help you scan them. For example, group chats get summarised, and if a message says you need to pick your kid up, it’ll surface that. This extends into your apps too, like Mail. Right from your email list, you’ll now see summaries of your emails that cut to the chase. Priority messages will appear at the top of your app, like a boarding pass for your trip today.

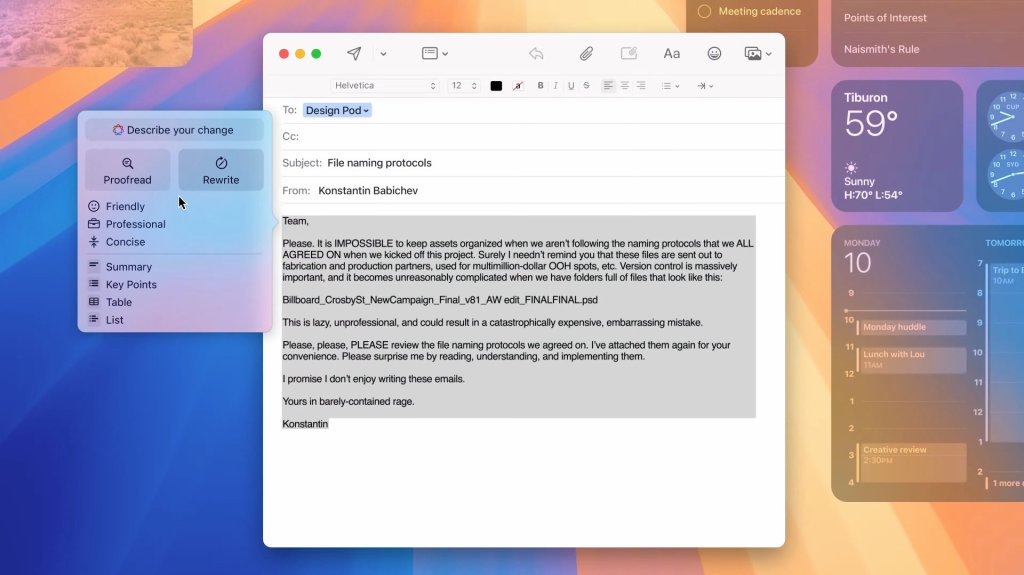

Apple’s writing apps now have features like Proofread, Smart Reply, and Rewrite – that includes Mail and Notes, alongside Pages and the rest. Your app will be able to suggest what to write for you, based on context. Or you can write a starting point and get it rewritten or proofread. Plus, in the Notes app, you can now enjoy AI-powered transcription. This also lives in Voice Memos, where you can get summaries as well. A favourite feature of mine is the ability to take your ID number from a picture and auto-fill it into a form.

Genmoji lets you create your own emojis. From right in Messages, you can create a custom emoji from a text prompt. You can use these in any messaging app. And it lives alongside the Image Playground, where you can generate images – which is built straight into communication and productivity apps. You can pick from three styles: sketch, illustration, and animation. And with the Image Wand feature in Notes, you can turn a sketch into a full-blown image.

As you probably could have guessed, photo editing gets new AI features. Clean Up, which is similar to Google’s Magic Eraser, can remove unwanted elements from your photos. You can also search in the Photos app using natural language. It’ll even be able to search through videos and take you to the right spot. And if you like Collections, you can now create video memories from a text prompt. It’ll suggest a narrative arc and pick a song from Apple Music.

ChatGPT officially lives in your Apple Intelligence device, without an account. Siri can tap into the AI chatbot when it needs to. It’ll work out when ChatGPT might be more helpful, and will suggest you ask it. You can include pictures, just like in the ChatGPT app. And if you want ChatGPT to generate images, it can. This is coming later this year, and will expand to support additional models.

All these Apple Intelligence features will work across iPhone, iPad, and Mac. Not all devices will be able to use these features, however. Since everything is processed on-device, you’ll need one of the latest models. That’s the iPhone 15 Pro series or later, and any M-chip iPad or Mac. Of course, they’ll need to run iOS 18, iPadOS 18, and macOS Sequoia. It’ll be available to test this summer, and will run in beta at launch in the fall. Better yet, Apple Intelligence will keep getting new features.